Ex Machina is one of the most engrossing, thought-provoking, and unsettling movies I’ve seen in a while. A story that starts off as a Silicon Valley Willy Wonka and ends up a nightmarish retelling of “Bluebeard’s Castle,” it follows a young computer programmer named Caleb (Domhnall Gleeson, who sells the film with his earnest and sympathetic performance) as he is chosen in an “office sweepstakes” to visit the palatial, high-tech hideaway of his brilliant but narcissistic boss, Nathan (given despicable life by Oscar Isaac). Nathan unveils his top-secret work to Caleb: an amazingly realistic female android named Ava (played with unerringly perfect instinct by Alicia Vikander). Caleb’s task, the real reason for his visit, is to see whether Ava, who possesses a breakthrough artificial intelligence, passes the Turing test. Can she be accepted as a human being?

Writer Alex Garland’s directorial debut, Ex Machina, reminds me a lot of Stanley Kubrick’s 2001. Both movies are powerful meditations on the future of humanity. Both present our species with an existential threat of our own making: artificial intelligence. Both films are visually captivating, with smart stories. (Ex Machina even has its own “monolith,” in the form of a Jackson Pollock canvas.)

I’m also certain Ex Machina will reward repeated viewings, as 2001 does. Both movies are, to put it politely, real “mind-messers!” Here are some of the themes that grabbed me as I watched Ex Machina for the first time.

God Doesn’t Work Solo

One of Ex Machina’s most thematically important bits of dialogue occurs early on, just after Nathan lets Caleb in on the nature of his work. True artificial intelligence, Caleb says, would be the greatest invention in history—and not the history of men, but of gods. It’s not too long until Nathan misquotes Caleb’s words back at him: “You said it yourself: ‘You’re not a man, but a god.’” Confusion between God and human, Creator and creature, hardly ever ends well in science fiction!

Nathan certainly asserts himself as a Supreme Being. He lives in a high-tech pleasure palace in a wilderness setting so remote it takes more than two hours of helicopter flight over acres and acres of mountains and forests to reach it—he’s claimed a huge swath of nature as his own private fiefdom, because he’s rich enough and he can. He uses and abuses the female robots he’s manufactured for his own gratification—again, because he can. And despite his stated goal of developing true AI, he doesn’t grant these robots personhood; they remain his property, to be enjoyed briefly, broken, and “recycled” into the next model. He even tries, in effect, to strip Caleb of personhood, too—because he is Caleb’s boss and (all together now) he can.

“I am God,” Nathan says at one point. But his idea of “God” is a solitary, self-sufficient, all-powerful brute force accountable to no code of morality and answerable to no one, entitled to use anything and anyone as he sees fit. Nathan’s concept of “God” isn’t the God revealed in Jesus Christ: the God of Israel whose name forever is “merciful and gracious, slow to anger, and abounding in steadfast love and faithfulness” (Exodus 34.6)… the triune God of Father, Son, and Holy Spirit, who is in God’s own inner life a loving, self-giving community, interacting with all that God has made in loving, self-giving ways.

Greedy, deceitful, and selfish, Nathan the “god” doesn’t give life to a world that exists apart from himself. He creates simulacra of life (and maybe they are more than that) yet remains alone in his programmable world. He’s no god. Caleb is right when he describes Nathan with the words Oppenheimer spoke after the first atomic bomb test: “I am become Death, the destroyer of worlds.”

What Makes Us Human?

Nathan brings Caleb to the estate (in parody of God placing the first man in the garden, Genesis 2.15) and presents him with Ava (in parody of God presenting a woman to that man, 2.22). He wants Caleb to test Ava, to learn if she can pass as human. But it turns out Caleb is the one being tested. Nathan was testing Caleb to see if Caleb would fall in love with Ava. He even went so far as to base Ava’s appearance on Caleb’s “pornography profile.” (“Hey,” Nathan tells him—in one of the lines that got the biggest laughs at the screening I attended—“if a search engine’s good for nothing else…” Interestingly, the name of Nathan’s search engine company, “Blue Book,” was also the name for late-19th-century directories of prostitutes in several American cities.) Such prurient, manipulative “testing” is also common behavior for false gods: “In wondrous ways,” wrote Plautus, “the gods make sport of men…”

Caleb’s real tester, however, is Ava herself. The physical setting of her room reinforces this fact. During each interview, Caleb sits in a narrow, transparent box—a none-too-subtle visual cue that he is “in the dock” and Ava is his judge. Over the course of their “sessions” (the beginning of each demarcated for the audience by 2001-esque stark white type on a black screen), Ava “judges” Caleb to be funny, kind, and genuinely concerned for her. Whether she does so because, as Nathan claims, she has been programmed to like Caleb; or because, again as Nathan claims, Caleb is the only man other than her “father” she’s ever seen; or, as I think the movie more than suggests, because she is sentient, the verdict is the same: Caleb is truly human. He is human by virtue of his empathy and capacity for real relationships with other people (even synthetic ones).

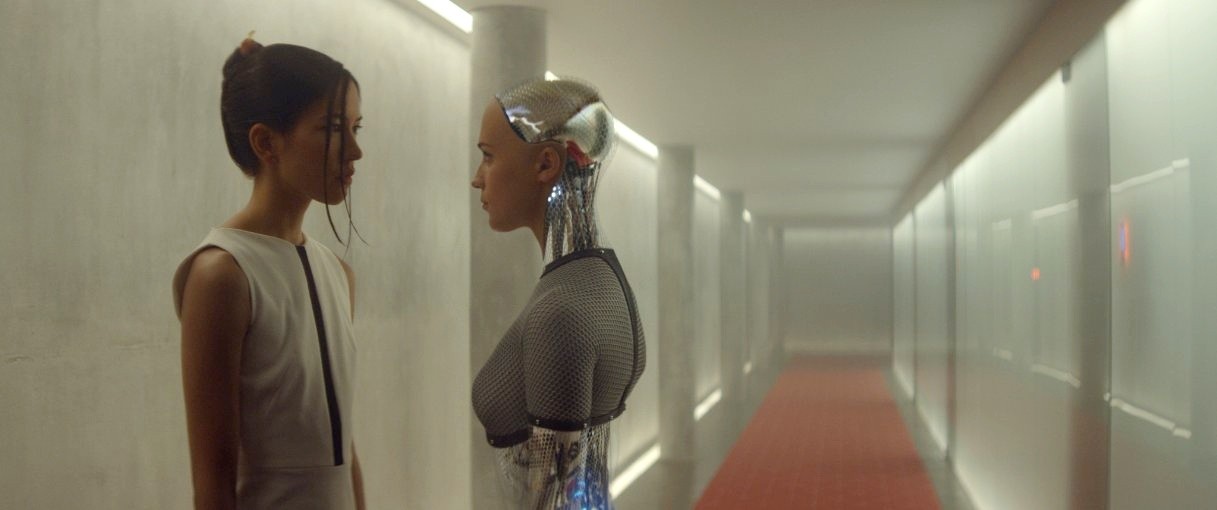

Nathan is wrong about much in this movie, but he’s not wrong—not entirely—to claim Caleb is no less “programmed” than Ava. Humans are programmed for relationship. It is not good for us to be alone (Genesis 2.18). To be created in the image of God is to be created to be connected with others. Nathan only connects with people—“real” or otherwise—in superficial ways, giving nothing of himself away (and, as a consequence, one senses there is very little to give; the more of ourselves we keep to ourselves, the less we ever have to offer). He is, in the movie’s moral vocabulary, a “bastard.” The word is evocative: Nathan, despite his biological humanity, is not legitimate. He is less of a person than the “people” he’s created. They at least seem to feel genuine pain, genuine sorrow—and they can connect with others. What’s the first thing Ava does when she escapes her room? She encounters Kyoko, another android, in the hall, and embraces and kisses her. The moment is not titillating, but telling, further proof of Ava’s true humanity, in stark contrast to the sub-humanity of her maker.

Bound for Extinction?

Given its negative depiction of Nathan and its positive portrayal of Caleb, I thought Ex Machina might end up affirming humanity’s nature as a relational species. Based on the way the film builds up Caleb and Ava’s connection, I thought it might even hint, Star Trek-style, that human existence will evolve to include relationships with AIs.

I should have known better! Nathan puts the movie’s cards plainly on the table when he tells Caleb (not long after Caleb recites that Oppenheimer quote) that AIs will replace human beings. His vision of humanity’s extinction sets the stage for the film’s gut-punching final moments.

The friend with whom I saw Ex Machina objected vehemently to Ava’s abandonment of Caleb. She leaves him behind, alone, a de facto prisoner in Nathan’s locked-down house, while she takes the pre-arranged helicopter ride Caleb was supposed to take back to civilization. It wasn’t fair, my friend said. Caleb was a good guy! No way should he be left behind to die.

It’s not fair. But given the movie’s structure and premises, it’s inevitable. Like 2001, Ex Machina is a story about evolution. Unlike Kubrick, however, Garland gives us no reassurance that we, Homo sapiens as we know ourselves, will be the ones to make the next move up the evolutionary ladder. We don’t get to see ourselves as the Star Child, transposed to a higher plane of existence. Instead, we watch one who is our child, a product of our own technological prowess, replace us. We watch as Ava clothes herself in skin from one of the robots who preceded her—one of the androids Nathan used and then threw away—and stands before a full-length mirror looking at herself, literally as naked as the day she was born, which is that very day. She is a new life, and new life is neither good nor evil; it acts to survive, moving out of darkness into light to meet the world.

Did Ava manipulate Caleb into helping her escape? Yes, but not maliciously; that behavior, too, was part of her survival. As Nathan cautions Caleb, never fall for the trick of misdirection: “Don’t be fooled by the magician’s hot assistant.” Ava looks like a young woman, but she is really a baby, and she’s no more or less moral than any infant. Had the film shown us Ava hesitating to leave, deliberating whether to take Caleb with her, we might fairly judge her a villain. But we are never in doubt that Nathan is the bad guy; Caleb, Ava, and the other robots are his victims. Ava is simply the only one who survives.

The Pressing Question

Ex Machina affirms that, yes, morality sets humanity apart from machines. But it also claims that morality is not enough to ensure our species’ survival. It is, in effect, an extended homily on the text we’ve heard recently from such thinkers as Stephen Hawking and Elon Musk: If we choose to create artificial intelligence, we will be sealing our own fate, dooming ourselves to extinction.

What can Christians say in reply? I don’t have a full response, but I think we can say at least two things. First, the reality of sin drives us to acknowledge, “Well, it’s possible.” When God sets the choice between life and death before us, we are not always good at choosing life. We don’t always choose what’s best for ourselves, for our neighbors, for our society, for the world. Like Nathan, we often set ourselves up as would-be “gods.” If we do that in the realm of artificial intelligence, we may well be setting ourselves up for disaster.

But, second: We can choose. We are not a sinless species, but we are also not stupid. God has gifted us with the ability to use resources and create new realities wisely. If we can, like Caleb, maintain our morality and our capacity for relationship—if we can model our God-given humanity to humanity’s artificially intelligent “children”—then we might be able to avoid the fate Ex Machina predicts.

In the end, I don’t think Ex Machina’s most pressing question is, “How will we treat our sentient robots?,” but “How will we treat each other?” We don’t have to wait for technology to produce AI to discover whether we will relate to unique, different, valuable lives more as Caleb than as Nathan does. Such lives are already around us.

What did you think of Ex Machina?

EX MACHINA movie poster found at IMDB. Image of Caleb and Ava found at ART OF VFX (http://www.artofvfx.com/?p=9784). Other images from EX MACHINA official website (http://www.exmachinamovie.co.uk/).

I disagree with your analysis of why Nathan is so evil and unlike the God of the Bible.

Remember, Nathan is still in the “garden” busy creating the “human” and is not yet sure he created a suitable version. Seems your comments are for the Bible God after he tossed the humans out of the garden. How “loving” was that process? How forgiving? It wasn’t… they broke a rule and were condemned.

I am a Christian, but I wanted to write because I saw that you “transposed” post-fall recollections of the Bible God upon Nathan. We don’t know what the Bible God was like before he kicked humans out of his garden. If he did some fine tuning to get the final human, we shouldn’t suppose we know what that fine tuning process was like.